Dojo Toolkit Slim Build

Hi everyone 🙂

Well, if you ended up here, I guess you have been, like me, looking all over the dojo docs, and even the whole internet, to find out how to make a small custom dojo build.

I needed that myself for my current project.

First of all, my own background is in traditional IT, down to the machine, with compiled languages. So when I hear “build”, I understand taking a bunch of files and putting them into one single, nice, compressed, easy to transfer, fast to run, almost magic file.

But that’s not what it means with dojo 😦 I guess that coming from the web, where you have files and requests online all the time, the standard is more to have all your mess on a server somewhere and not worry about it. Therefore a “build” is only an optimisation, a way to reduce the mess… that ultimately still remains around. And that’s what happens when you do a standard build with dojo: all files are copied anyway, just in case something would be missing…

However, when I am building a web app, I am also thinking about what happens if:

- my external network goes down, and I still need to access all my resources.

- I want to port it to another platform

- I want to be able to have everything offline on a USB disk and I need it to work even if I am not connected

- etc.

And for all these reasons, what I want is only one “mydojobuild.js” file. But sadly very few docs on the web try to achieve that goal.

After a few days of trying a bit of everything, here is what I ended up with:

- a built “dojo.js”

- a few “nls/nls_<locale>.js”

🙂


First, a few things that are quite useful to know, but that took me some time to understand:

- The command line options for the build can also be included in the profile file. Pretty useful if you want to script the whole process like I do.

- Even though your profile specifies only one layer to be built, the dojo build system will always build at least a dojo/dojo.js (and usually also a dijit/dijit.js and a dojox/dojox.js, I think, even if you don’t care about them). So if you want all your dependencies in *one file*, you have to put them in dojo/dojo.js.

And here is what my build profile looks like:

    // http://docs.dojocampus.org/build/buildScript
    dependencies = {
        stripConsole: "normal",
        action: "release",
        mini: true,
        optimize: "shrinksafe",
        layerOptimize: "shrinksafe",
        releaseName: "my_dojo_build",

        // list of locales we want to expose
        localeList: "en-gb,en-us,fr-fr",

        layers: [
            // Custom build of the mandatory dojo.js layer
            {
                name: "dojo.js",
                customBase: true,
                dependencies: [
                    "dojo.i18n",
                    "dijit.Dialog",
                    "dijit.form.Form",
                    "dijit.form.Button",
                    "dijit.form.ValidationTextBox"
                ]
            }
        ],

        prefixes: [
            [ "dijit", "../dijit" ],
            [ "dojox", "../dojox" ]
        ]
    }

Pretty small and maintainable 🙂

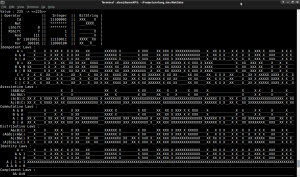

I build it with:

    cd dojo/util/buildscripts && \
    sh build.sh profileFile="path_to_my_profile" log=WARN version=1.6.1

Then I only need to get the dojo/dojo.js file, as well as the dojo/nls/dojo_* for localization.

And because, believe it or not, I test my application before deploying it ;), if I miss a dependency from dojo, I will see it and fix the build profile. So I don’t need all the files that the dojo build puts around (but I haven’t found any option to disable this step in the build yet). Ah, and just in case you are wondering: yes, localization files are separate and will remain out of dojo.js, because if I am speaking French I don’t want to load all languages at runtime…

I am now pretty happy with my solution, as I can run the build and extract what I need with a simple waf script, in the configure method, such as:

    # Needs "import os" and "import shutil" at the top of the wscript.
    # dojobuildnode is assumed to be the node of the dojo release directory,
    # found earlier in configure().

    # finding dojo mandatory layer
    dojobaselayer = dojobuildnode.find_node("dojo/dojo.js")
    if dojobaselayer is None:
        conf.fatal("dojo/dojo.js mandatory base layer was not found "
                   "in build directory. Cannot continue.")

    dojobaselayer_uc = dojobuildnode.find_node("dojo/dojo.js.uncompressed.js")
    if dojobaselayer_uc is None:
        conf.fatal("dojo/dojo.js.uncompressed.js base layer was not found "
                   "in build directory. Cannot continue.")

    # finding the scripts folder where to put build results
    scriptsnode = conf.path.find_dir('htdocs/scripts')
    if scriptsnode is None:
        conf.fatal("htdocs/scripts/ subfolder was not found. Cannot continue.")

    # copying mandatory dojo layer
    conf.start_msg("Extracting Dojo built layer")
    shutil.copy(dojobaselayer.abspath(), scriptsnode.abspath())
    conf.end_msg(scriptsnode.find_node(
        os.path.basename(dojobaselayer.abspath())
    ).relpath())

    conf.start_msg("Extracting Dojo built layer - uncompressed")
    shutil.copy(dojobaselayer_uc.abspath(), scriptsnode.abspath())
    conf.end_msg(scriptsnode.find_node(
        os.path.basename(dojobaselayer_uc.abspath())
    ).relpath())

    # extracting localization resources
    dojonls = dojobuildnode.find_node("dojo/nls")
    if dojonls is None:
        conf.fatal("dojo/nls for mandatory base layer was not found "
                   "in build directory. Cannot continue.")

    conf.start_msg("Extracting Localization resources ")
    scriptsdnlsnode = scriptsnode.make_node("nls")
    scriptsdnlsnode.mkdir()

    # copying localization resources from the build,
    # excluding the files copied by default by the dojo build process
    for fname in dojonls.ant_glob("dojo_*"):
        shutil.copy(fname.abspath(), scriptsdnlsnode.abspath())
    conf.end_msg(scriptsdnlsnode.relpath())

DISCLAIMER: I cannot guarantee that the script up there will work. It’s Python, and I had to re-indent it to fit in here… So you might have some quick and easy syntax fixes to do if you copy it.

Well, that’s it for now. Dojo is pretty slim and I am happy, as it’s not so heavy to move around any more.


Two things I still need to investigate:

- How to embed dijit themes in a build. That should be possible, as I saw some references on the web, but no example so far… So if you have a clue, or any question, just let me know 😉

- How to rename the dojo.js file and the matching pathname in js code. That should be possible with the scopeMap option, which can map dojo.* to mycustomname.* However, I’ll need a lot more tests to make that work properly, I believe.

And now you can go explain to your dojo build how to go on diet 😉

Some experimental geek time again…

Hey everyone,

Since I was quite busy these last months working on Toonracer at BigPoint, I didn’t get much time to work on my personal projects…

I am also still working on FDPoker with the fairydwarves, available on the Chrome store, and wondering how to make a little bit of money from it, in order to get enough time to improve it.

And as I am looking for my next assignment, job, or contract, I recently experimented with Gradle and webapp deployment in Tomcat. In two days I had a basic build and deployment process running, which indicates to me that Gradle is a good candidate as a build system if I ever do any Java again. I had a quick look at Groovy, but the fact that many things are implicit (or is it only Gradle?) is still quite disturbing to me, as it means you need to understand everything before you look at some code. I prefer it the other way around: you look at the code first, and then from the code you can understand how things work.

And for Java, I still think that either you do something properly in C++ and it runs everywhere you test it, or, if you really want scalability and lots of other nice features, you’d go for Erlang for the back end, or web-based for the front end, since it seems to me that the future is that way.

While I have been experimenting with JavaScript to make a poker game, I came to the conclusion that OO programming is useful, at a low level. When you do functional or higher-level programming, you need to organise your code differently. JavaScript being functional, I will need to spend time rewriting the poker code that I wrote in an imperative style (as I was used to in C++).

I am also wondering if prototyping things with Node.js could be a good option for later Erlang programming? Maybe in the way Python is a good prototyping candidate for a C++ project, as you can migrate from one tech to the other step by step…

So as I am still interested in Distributed Game Engine Designs, I am thinking that designing a quick JavaScript game engine on the client side first could be a good step towards that goal. It will also be used in small web games, to keep it active and maintained. Then some parts could be moved to a Node.js-based backend. Experimenting with this system could be a good way to get a better understanding of how to design and write a distributed game engine, at a relatively low cost (given the size of the project). It would also be functional, so we can hope to make it scalable easily by switching to Erlang later on when needed (erlang-js could also help there).

I am at the moment working on FDPoker, understanding what makes code maintainable and yet efficient in JavaScript.

As you can see, all the display is there, and input is also working in different browsers and web engines, on different platforms.

We are primarily using the dojo toolkit and dojox.gfx, so we don’t have to worry about implementing for different browsers, versions of web standards, etc.

And while we don’t have time to test a lot of different platforms, we were developing the engine to run on top of the EFL libraries.

It has been working OK so far for us, but on our deployment platform (Freebox) it was tricky to get things right… And it seems they had enough problems to justify dropping EFL support in the last version of the Freebox… But anyway, no matter where that project goes, by building a solution on top of JavaScript we should always be able to run on most platforms.

However, this little JavaScript engine is not very reactive yet and supports only GUI-based interaction. As soon as we can get some income, we will turn it into a full client-side 2D game engine in JavaScript, with features similar to the ones in Project0 (which is based on SDL).

The more money can be made with FDPoker, the more nice features can be developed in the next JavaScript engine, preparing for the next game 🙂

So if you want to help fairydwarves make better games, please do not hesitate to tell us you love us 🙂

Extending std::ostream HOWTO


First things to know.

- All basic_* classes are actually the template versions of the usual classes. So it’s simpler to work with the specialized classes for early development.

That is, instead of working with the class template std::basic_ostream<class _CharT, class _Traits>, work with std::ostream.

It’s actually just because of typedef basic_ostream<char, char_traits<char> > ostream;

- Streams are there to hide the actual implementation of communicating with the outside world, and to separate communication from the logic of your application. This type of communication requires buffering for decent performance, and the buffers are implemented in streambuf’s child classes. Decent STL implementations should provide you with stringbuf and filebuf as a minimum.

Therefore we could represent dataflows like this :

App ==>> ostream -->> ostreambuf --- ES

ES --- istreambuf -->> istream ==>> App

ES stands here for external systems, whether it is the OS, or any other system you need to connect to without bothering about connection intricacies.

Design choice

For simplicity’s sake, we will work with a very simple use case:

    MyOStreamBuf sb;
    MyOstream mos(&sb);
    int value = 42;
    mos << "value :" << value << std::endl;

The simplest way to extend a stream is usually to extend streambuf with “mystreambuf” and provide a stream extension “mystream” to work on it the same way that ostream works on streambuf. This way the user of mystream will use it as he would use std::ostream, and the implementation details will be hidden.

Streambuf Extension : What is needed ?

There is actually very little required to extend std::streambuf. Here is an example with a nullstream implementation (template version), which does nothing.

streambuf::overflow is called whenever the buffer is full and action needs to be taken to accept more characters. Depending on the kind of buffer we are manipulating, this can mean flushing, extending buffer memory, etc.

It is expected that any class implementing streambuf will override streambuf::overflow(typename traits::int_type c)

The other functions usually overridden when writing streambuf implementations are streambuf’s protected virtual functions. It is advised not to redefine any public function, as they guarantee the proper behaviour of std::streambuf when used by a stream. In their specialized version:

- virtual std::streamsize xsputn ( const char * s, std::streamsize n ); is called whenever a sequence of characters needs to be written into the std::streambuf via std::streambuf::sputn(), called from ostream& operator<<(ostream& , Type inst). Special formatting can be done here.

The return value is expected to be n. If it is smaller (0, for instance), the stream using that buffer will set an error flag (ios::badbit in common implementations), which blocks further output on that stream until the state is cleared.

- virtual int sync (); is called whenever the buffer needs to be synchronised with the ES, usually via std::streambuf::pubsync() called from std::ostream::flush() (called by the manipulator std::endl for example).

On the use case :

    mos << "value :"   // calls xsputn() (or overflow() if the buffer is full)
        << value       // calls xsputn(); note that the formatting from int to char* has been done by operator<<
        << std::endl;  // writes '\n' (overflow() if the buffer is full), then calls flush(), which calls sync()
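To see the call sequence of the use case for yourself, here is a minimal sketch of a tracing buffer. TraceStreamBuf, its counters and run_use_case are hypothetical names made up for this illustration. Which of overflow() or xsputn() gets called for each insertion depends on your STL implementation, so the sketch handles both and simply counts them.

```cpp
#include <cassert>
#include <ostream>
#include <streambuf>
#include <string>

// Hypothetical tracing buffer: it has no put area, so every write goes
// through overflow() or xsputn(), and every flush goes through sync().
class TraceStreamBuf : public std::streambuf {
public:
    std::string data;        // everything that reached the buffer
    int overflow_calls = 0;
    int xsputn_calls = 0;
    int sync_calls = 0;

protected:
    int_type overflow(int_type c) override {
        ++overflow_calls;
        if (!traits_type::eq_int_type(c, traits_type::eof()))
            data.push_back(traits_type::to_char_type(c));
        return traits_type::not_eof(c);  // anything but eof means success
    }

    std::streamsize xsputn(const char* s, std::streamsize n) override {
        ++xsputn_calls;
        data.append(s, static_cast<std::size_t>(n));
        return n;  // report that all n characters were consumed
    }

    int sync() override {
        ++sync_calls;
        return 0;  // 0 means success, -1 means failure
    }
};

// Runs the use case from the text on the given buffer.
inline void run_use_case(TraceStreamBuf& sb) {
    std::ostream mos(&sb);
    int value = 42;
    mos << "value :" << value << std::endl;
}
```

After run_use_case(sb), sb.data holds the text that reached the buffer, and the three counters show how your implementation split the work between the virtual functions.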

Stream Extension : Wrapping My streambuf extension

Here is an example with a nullstream implementation. Watch how the constructor needs to call init() with the buffer, to bind the stream to it.

- An explicit constructor of the stream can also be created, taking the proper streambuf type as a parameter. In the specialized version:

explicit Mystream(Mystreambuf* lsb);

- It is also good to redefine Mystreambuf* rdbuf() const to return the actual Mystreambuf type instead of the original std::streambuf.

- For the same reason, you should also redefine:

//Put character (public member function)

Mystream& put ( char c );

//Write block of data (public member function)

Mystream& write ( const char* s , std::streamsize n );

//Flush output stream buffer (public member function)

Mystream& flush ( );

Conclusion

You should now be able to write your own streambuf implementation, and a stream to allow the use of operator<< for all basic types, as well as all the standard manipulators defined in STL. And this without coding any operator<< function or manipulator.

std::streambuf can be extended quite simply. It is possible but more complicated to extend std::ostream, as it would probably require rewriting a lot of code. Therefore, if an extended feature can be put into the streambuf, it seems much simpler to do so.

This has been used in the AML project to write logstreambuf and logstream. At the time of writing the development of the Logging feature is still going on…

Busy C++ coding and testing…

I have been pretty busy lately, mostly with AML. Now that most features are there, and that a stable structure is emerging, the main goal is to write a full test suite, and improve code quality and documentation. This way we should be able to spot all differences introduced by new code very quickly. And since the project is multiplatform, it will also be tremendously useful to spot differences between platforms easily.

But a clever, complete test suite for “driver-output based software” (understand: software whose main job is to output things on hardware through a driver) is very difficult to make. To be able to automate such a test suite, you also need to get back the information you just output, to check it. I started doing that for images, but I don’t have much of an idea yet about how I can implement tests for keyboard input, joypad, or font rendering for example… audio, anyone?

And this is actually much more work than I anticipated. But it will save even more later down the road…

We decided not to use cppunit just yet, because it would be one more dependency and one more library to learn. Better to focus on the tests themselves, we can always start using cppunit later.

I recently have been working on a logger for the Core part of AML. The main features were :

- use of log level filtering of messages

- use of stream operator seamlessly

- insertion of custom prefix

- insertion of date, threadID (later), and other useful information to identify future issues

- default output to clog (console)

- optional output to file

- being able to extend the log system to use new outputs (syslog, win32dbg, etc.)

So I decided to extend the ostream class from the STL. But to do this without having to overload each operator<<, it was much better to extend streambuf as well. However, I ended up extending stringbuf directly, as I wanted a stringstream-like input buffer that, on sync(), copies everything to the streambuf chosen as sink. Not easy work at first, because it can depend on your STL implementation. But once you figure out how it all works, things become easy 😉 For example, just to derive a streambuf that does nothing:

    #include <ostream>
    #include <streambuf>

    // A stream buffer that discards everything written to it.
    template <class cT, class traits = std::char_traits<cT> >
    class basic_nullstreambuf: public std::basic_streambuf<cT, traits>
    {
    public:
        basic_nullstreambuf();
        ~basic_nullstreambuf();

        typename traits::int_type overflow(typename traits::int_type c)
        {
            return traits::not_eof(c); // indicate success
        }
    };

    template <class cT, class traits>
    basic_nullstreambuf<cT, traits>::basic_nullstreambuf()
        : std::basic_streambuf<cT, traits>()
    {}

    template <class cT, class traits>
    basic_nullstreambuf<cT, traits>::~basic_nullstreambuf()
    {}

    // An output stream bound to its own null buffer.
    template <class cT, class traits = std::char_traits<cT> >
    class basic_onullstream: public std::basic_ostream<cT, traits> {
    public:
        basic_onullstream():
            std::basic_ios<cT, traits>(),
            std::basic_ostream<cT, traits>(0),
            m_sbuf()
        {
            // init() lives in a dependent base class, so inside a
            // template it has to be reached through this->
            this->init(&m_sbuf);
        }

    private:
        basic_nullstreambuf<cT, traits> m_sbuf;
    };

    typedef basic_onullstream<char> onullstream;
    typedef basic_onullstream<wchar_t> wonullstream;
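The stringbuf-based approach mentioned above (buffer the text, then copy it to the sink on sync()) could look roughly like the sketch below. This is not the AML logger itself: PrefixLogBuf and PrefixLogStream are hypothetical names, only the custom prefix feature is shown, and the stream is bound to its buffer with rdbuf() rather than a second init() call.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <streambuf>
#include <string>

// Hypothetical buffer: collects text like a stringbuf and, on sync(),
// forwards the pending text to a sink streambuf, preceded by a prefix.
class PrefixLogBuf : public std::stringbuf {
public:
    PrefixLogBuf(std::streambuf* sink, const std::string& prefix)
        : std::stringbuf(std::ios_base::out), m_sink(sink), m_prefix(prefix) {}

protected:
    int sync() override {
        const std::string pending = str();
        if (!pending.empty()) {
            m_sink->sputn(m_prefix.data(),
                          static_cast<std::streamsize>(m_prefix.size()));
            m_sink->sputn(pending.data(),
                          static_cast<std::streamsize>(pending.size()));
            str(std::string());  // empty our own buffer for the next message
        }
        return m_sink->pubsync();  // propagate the flush to the sink
    }

private:
    std::streambuf* m_sink;
    std::string m_prefix;
};

// Hypothetical stream wrapper that owns its PrefixLogBuf.
class PrefixLogStream : public std::ostream {
public:
    PrefixLogStream(std::streambuf* sink, const std::string& prefix)
        : std::ostream(nullptr), m_buf(sink, prefix) {
        rdbuf(&m_buf);  // bind the stream to the buffer and clear its state
    }

private:
    PrefixLogBuf m_buf;
};
```

Every operator<< and manipulator of std::ostream works unchanged on PrefixLogStream; the prefix is written once per flush, so one std::endl or std::flush per log message gives one prefixed line in the sink.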

Since AML is aiming at being completely cross-platform, and since I started playing quite a lot with the STL (with the logger, but also with the delegate implementation written some time ago), I decided to use STLport. I am quite happy with it, as it comes with extensive test code and works fine on most platforms. But sadly it lacks documentation, help, and activity in the community.

But to be honest I am actually quite impressed ( even myself ) at how easy it can be to add a new dependency check into wkcmake. Drop a usual FindModule.cmake in the WkCMake’s Modules (if it’s not already in CMake’s Modules ), add 3 lines to the WkDepends wrapper, one line to WkPlatform header, and that’s it. Next time you run cmake on your project you can see the detected includes and libraries for the new Module, and generate your project. I just did it for STLport on AML in a few minutes.

I am hoping I can get something stable for AML’s Core quickly, so I can start focusing on the network part, probably using ZeroMQ. Because multi player games are just much more fun, and after all, proper distributed simulation is what the project is also about since the beginning…

Distributed Design… MOM ?

I have been, in the past few weeks, looking more deeply into my distributed software project, writing quite a bit of code for the logic of the software itself. I was trying to satisfy my first requirement: keeping track of event causality (with a simple vector clock for a start). However, when I came to write the interface with other instances, I found myself stuck in the mud, trying to write something I didn’t plan for… I had been thinking in the abstract world, with bubbles and arrows, but when you come down to the wire, you have to send bits through the pipe… So, just while I was struggling to find a good solution to send a message efficiently to multiple peers (multicast, multi-unicast, etc.), I realized that I could use a Message Oriented Middleware…
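For reference, a simple vector clock fits in a few lines. The sketch below is a generic illustration in C++, not the project’s actual code: the VectorClock class, its method names and the fixed number of processes are all assumptions made for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One counter per process; entry i counts the events known from process i.
// The fixed process count is an assumption of this sketch.
class VectorClock {
public:
    VectorClock(std::size_t process_count, std::size_t self)
        : m_ticks(process_count, 0), m_self(self) {
        assert(self < process_count);
    }

    // Local event (including "about to send"): advance our own entry.
    void tick() { ++m_ticks[m_self]; }

    // On receive: element-wise maximum, then count the receive event itself.
    void merge(const VectorClock& received) {
        assert(received.m_ticks.size() == m_ticks.size());
        for (std::size_t i = 0; i < m_ticks.size(); ++i)
            m_ticks[i] = std::max(m_ticks[i], received.m_ticks[i]);
        tick();
    }

    // True if *this causally precedes other:
    // every entry is <= and at least one entry is strictly <.
    bool happened_before(const VectorClock& other) const {
        bool strictly_less = false;
        for (std::size_t i = 0; i < m_ticks.size(); ++i) {
            if (m_ticks[i] > other.m_ticks[i]) return false;
            if (m_ticks[i] < other.m_ticks[i]) strictly_less = true;
        }
        return strictly_less;
    }

    // Neither clock precedes the other and they are not equal.
    bool concurrent_with(const VectorClock& other) const {
        return m_ticks != other.m_ticks &&
               !happened_before(other) && !other.happened_before(*this);
    }

private:
    std::vector<unsigned long> m_ticks;
    std::size_t m_self;
};
```

A message carries a copy of the sender’s clock; the receiver calls merge() on it, after which the send is guaranteed to compare as happened_before the receiver’s state.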

I went searching into this type of technology, and found mostly AMQP. Other messaging middleware exists, starting with CORBA, some more open than others. One worth noting though is ZeroMQ, mostly because, being brokerless, it’s very different from AMQP.

RabbitMQ also seems to be a very interesting implementation of AMQP, especially because of its use of Erlang to achieve high availability and fault tolerance.

While many people seem to think that the slowness of the early HLA RTI implementations could be blamed on CORBA, it certainly provided a very handy foundation on which to build the future IEEE 1516 standard. Therefore I think that, following the path of reusing the wheel that has already been made, I should use a MOM.

I will give it a go, and rethink my previous layered architecture to :

– RabbitMQ or ZeroMQ ( which greatly reduce the scope of my work, yey ! )

– Event Causality Tracking ( my main focus right now )

– Distributed Data Interpolation ( Basic dead reckoning algorithm )

– Game Engine Layer (Time keeping, space maintenance, etc.)
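As an illustration of the “basic dead reckoning” item in the list above, here is a minimal first-order sketch. The RemoteState layout, the 2D restriction, the function names and the drift threshold are all assumptions made for the example, not a design taken from the project.

```cpp
#include <cassert>
#include <cmath>

// Last state received from a remote peer (hypothetical layout, 2D only).
struct RemoteState {
    double x, y;    // position at time t
    double vx, vy;  // velocity at time t, in units per second
    double t;       // timestamp of the update, in seconds
};

struct Position {
    double x, y;
};

// First-order dead reckoning: assume constant velocity since the last update.
inline Position extrapolate(const RemoteState& s, double now) {
    const double dt = now - s.t;
    return Position{s.x + s.vx * dt, s.y + s.vy * dt};
}

// The owner of an object only needs to send a new update when the guess
// its peers are making drifts further than `threshold` from the truth.
inline bool needs_update(const RemoteState& last_sent, const Position& actual,
                         double now, double threshold) {
    const Position guess = extrapolate(last_sent, now);
    return std::hypot(actual.x - guess.x, actual.y - guess.y) > threshold;
}
```

Between two updates, every peer draws the object at extrapolate(last_state, now); the sender runs the same extrapolation locally so that it knows when the others have drifted too far.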

Comparing ZeroMQ and RabbitMQ, I think I’ll start with RabbitMQ, because it already provides good reliability. ZeroMQ is more flexible, but it might also be a bit trickier to grasp at first. And well, I started prototyping my code in Erlang, so I might as well go Erlang all the way there. More details in a future post, when I get some time to write some more code.

Random Stuff

During the last month, I kept improving some code in my little cross-platform graphic library. A new design is now in place to support all the different pixel formats in OpenGL (except palette) the same way as in SDL. It was a fair bit of work, and a lot of tests still need to be written.

I also found something interesting : the FAWN project.

I have been thinking about that concept ever since I learned about “One-Board PCs” and clusters… But I didn’t want to spend so much time and money before knowing if it would be useful or not. Well, some answers are in their paper, but I still wonder if research is going on there or not. It seems pretty interesting so far, but I am really wondering about hot-swapping nodes…

For those interested, they are selling their cluster on eBay.

On the downside I also learned about ACTA… Sometimes I am saddened by the dedication mankind can put to destroy itself, just for the blind profit of some…

SDL, OpenGL, PixelFormat and Textures

SDL and OpenGL have different ways of storing the color of a pixel. SDL uses its SDL_PixelFormat structure to describe how color information is stored, while OpenGL uses a list of possible texture formats that you choose from when you create a new texture with glTexImage2D.

I struggled a little before being able to find a proper way to convert an SDL_Surface to an OpenGL texture recently for my graphic library, so I decided to post my findings here, because I couldn’t easily find any exhaustive information on the web… I will not talk about the uncommon palette image with transparency yet, or other 8-bit displays 😉 Even nowadays, with quite standard configurations, there are some issues, and I will talk here only about displaying true color images on true color displays. If your image is not true color (that is, pixel color information takes less than 24 bits), you can always convert it beforehand, as data, or in your program.

- First of all, if you care about OpenGL implementations earlier than 2.0, you need to convert your texture to a “power of two” size, that is, a width and height of 32, 64, 128, 256, etc. To achieve that in SDL, I create a new surface with the same flags, and blit the old surface onto the newly created one. If you care about transparency, you need to transfer the colorkey and surface alpha values to the new surface before blitting, so that everything is blitted.

- Then you have to convert your SDL_Surface to the proper format before using it as a texture. As your display will probably be true color if you are using OpenGL, you can use SDL_DisplayFormat to convert your surface to a format of at least 24 bits. It seems possible to display palettized textures with OpenGL, but unless you use a great many of them, such a complicated technique can seem overkill. Also, if you have a colorkey on your surface, you need to use SDL_DisplayFormatAlpha, so that the pixels with the colorkey value will be converted to alpha (fully transparent) on the new surface.

- You need to find the proper texture format. There is also an issue with the colorkey, which can sometimes generate an alpha channel that you shouldn’t use (shown in SDL_PixelFormat by Aloss == 8). Here is the code I am using (on a little-endian machine):

    // needs <stdexcept> for std::logic_error
    if (surface->format->BytesPerPixel == 4) // contains an alpha channel
    {
        if (surface->format->Rshift == 24 && surface->format->Aloss == 0) textureFormat = GL_ABGR_EXT;
        else if (surface->format->Rshift == 16 && surface->format->Aloss == 8) textureFormat = GL_BGRA;
        else if (surface->format->Rshift == 16 && surface->format->Ashift == 24) textureFormat = GL_BGRA;
        else if (surface->format->Rshift == 0 && surface->format->Ashift == 24) textureFormat = GL_RGBA;
        else throw std::logic_error("Pixel Format not recognized for GL display");
    }
    else if (surface->format->BytesPerPixel == 3) // no alpha channel
    {
        if (surface->format->Rshift == 16) textureFormat = GL_BGR;
        else if (surface->format->Rshift == 0) textureFormat = GL_RGB;
        else throw std::logic_error("Pixel Format not recognized for GL display");
    }
    else throw std::logic_error("Pixel Format not recognized for GL display");

- Once all this is done you can load your texture as usual with OpenGL, and everything should work 😉

    // GL_UNPACK_ALIGNMENT only accepts 1, 2, 4 or 8, so 3-byte pixels use 1
    glPixelStorei(GL_UNPACK_ALIGNMENT, (ptm_surf->format->BytesPerPixel == 4) ? 4 : 1);
    glGenTextures(1, &textureHandle);
    glBindTexture(GL_TEXTURE_2D, textureHandle);
    glTexImage2D(GL_TEXTURE_2D, 0, ptm_surf->format->BytesPerPixel, ptm_surf->w, ptm_surf->h, 0, textureFormat, GL_UNSIGNED_BYTE, ptm_surf->pixels);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
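The “power of two” step from the first point can be sketched as plain arithmetic, independent of SDL and OpenGL. The function and struct names below are hypothetical, chosen for this example only; they compute the size of the padded surface and the texture coordinate at which the original image ends inside it.

```cpp
#include <cassert>

// Smallest power of two that is >= n. Requires 0 < n <= the largest
// power of two an unsigned can hold, otherwise the shift would wrap.
inline unsigned next_power_of_two(unsigned n) {
    const unsigned largest = ~0u - (~0u >> 1);  // only the top bit set
    assert(n > 0 && n <= largest);
    unsigned p = 1;
    while (p < n) p <<= 1;
    return p;
}

struct TextureSize {
    unsigned w, h;
};

// Size of the surface to create before blitting the original image onto it.
inline TextureSize padded_texture_size(unsigned w, unsigned h) {
    return TextureSize{next_power_of_two(w), next_power_of_two(h)};
}

// Texture coordinate of the right (or bottom) edge of the original image
// inside the padded texture; 1.0 would include the padding.
inline float max_tex_coord(unsigned image_extent, unsigned texture_extent) {
    return static_cast<float>(image_extent) / static_cast<float>(texture_extent);
}
```

For a 100x30 image this gives a 128x32 texture, which would be the width and height passed when creating the new surface (for instance with SDL_CreateRGBSurface), and the quad would then be drawn with texture coordinates running from 0 to max_tex_coord() instead of 0 to 1.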

I hope this little post will help those, like me, who like SDL and OpenGL, but have trouble mixing them. The code is available in my open-source graphic library, under heavy development at the moment. Our goal is pretty big, and help, in any way, is very welcome. Don’t hesitate to contact us if you want to get involved 😉

And for the experts out there, let me know if you spot some errors in here.

Back to coding…

Erlang Bitstring Operators

After looking more deeply into what a DHT like Kademlia does or does not do, I started to write some useful code in Erlang…

First, it seems (quite intuitively, I must say) that a DHT doesn’t say anything about connections… That is, who I connect to at first, how to choose my endpoint, etc. Everything that is not enforced by the DHT mechanism is an opportunity to tune the system towards more special needs and features beyond what the DHT itself offers. Therefore I am making the intuitive assumption that a good way would be to connect to a very close node. BATMAN has an interesting and quite simple way of handling connections, so I decided to follow the example 😉

I just finished a UDP broadcaster in Erlang, pretty simple, that basically advertises to its online neighbors that it came online, and registers its neighbors’ replies.

Then comparing This and That I got a bit confused about some stuff…

For example: the nodeID seems to be up to the user, provided a few conditions are met… is it really?

But anyway, it is certain that the nodeID is going to be a long binary. So I decided to start implementing a key scheme for what I had in mind for the dynamic vector clock algorithms, that is, a key based on the order of connection of the different nodes.

However, I was quite disappointed to *not find* any simple way to deal with bitstring operations in Erlang… Bitwise operations work on integers only, and apparently that would mean going 8 bits at a time, for convenience, with a bitstring… So I started writing my own module for that, with bs_and, bs_or, bs_not, and so on, that work on a bitstring of arbitrary size. It’s pretty basic and not optimized at all, but it works. Not sure if there is some interest out there for it, but let me know if there is, I can always put it on GitHub somewhere 😉
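The Erlang module itself is not shown here. As an illustration of the same idea in C++, here is a sketch of bitwise operators over byte strings of arbitrary length. It borrows the bs_and, bs_or and bs_not names from the text; bs_xor, bs_zip and the Bits type are hypothetical additions. Two simplifications are assumed: it works on whole bytes only (an Erlang bitstring can have any number of bits), and the shorter operand is padded with zero bytes on the left.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

typedef std::vector<std::uint8_t> Bits;  // big-endian: byte 0 is the most significant

// Applies op byte by byte, aligning both operands on their last byte.
// The shorter operand is treated as if padded with zeros on the left.
template <class Op>
Bits bs_zip(const Bits& a, const Bits& b, Op op) {
    const std::size_t n = std::max(a.size(), b.size());
    Bits out(n);
    for (std::size_t i = 0; i < n; ++i) {  // i counts from the last byte
        const std::uint8_t x = i < a.size() ? a[a.size() - 1 - i] : 0;
        const std::uint8_t y = i < b.size() ? b[b.size() - 1 - i] : 0;
        out[n - 1 - i] = op(x, y);
    }
    return out;
}

inline Bits bs_and(const Bits& a, const Bits& b) {
    return bs_zip(a, b, [](std::uint8_t x, std::uint8_t y) {
        return static_cast<std::uint8_t>(x & y);
    });
}

inline Bits bs_or(const Bits& a, const Bits& b) {
    return bs_zip(a, b, [](std::uint8_t x, std::uint8_t y) {
        return static_cast<std::uint8_t>(x | y);
    });
}

inline Bits bs_xor(const Bits& a, const Bits& b) {
    return bs_zip(a, b, [](std::uint8_t x, std::uint8_t y) {
        return static_cast<std::uint8_t>(x ^ y);
    });
}

// Flips every bit; the length is unchanged.
inline Bits bs_not(Bits a) {
    for (std::uint8_t& byte : a) byte = static_cast<std::uint8_t>(~byte);
    return a;
}
```

bs_xor is included because XOR over two node IDs is what Kademlia uses as its distance between nodes, so it is a natural operation to have next to the others.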

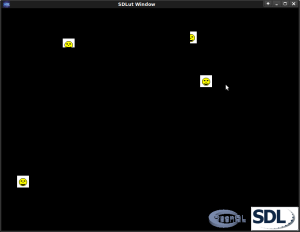

Other than that, I keep working on my little portable SDL-based Game Engine, which now has OpenGL enabled by default if available on your machine.

It’s working pretty nicely for simple bitmap display. Now the refresh is optimized (I should display fps up there 🙂), and the user doesn’t have to manage the list of rectangles to know what has changed on the screen or not (which wasn’t supported before in SDL render mode). Also, OpenGL can be disabled if not needed, and nothing changes in the interface for the user 🙂 pretty handy 😀 That was some work, but now the most troubling point is the “Font” part, which doesn’t behave exactly as you would expect… more work to be done there.

Towards a beginning of a design ?

I have been thinking for a very long time over this, gathering research papers, and browsing the Internet to look for a possible way to implement what I was thinking of… If I wanted to name it, it would be something like: A Decentralized Distributed MMO (or not) Game (or more serious) Engine.

The idea seems simple, and quite intuitive; however, one needs to be aware of the Fallacies of Distributed Computing.

Here is what we have, from a very abstract and high-level point of view: Computers, and links between them.

Here is what we want to do with it: Store Data, Send Messages, Process Instructions

And we can see the problems we will have to face: data might not be available, data might be corrupt, messages can disappear, messages can be in the wrong order, links can disappear, Network Topology can change, etc.

Here is what I want :

– No central point

– distributed topology, with nodes joining or leaving anytime

– resilient even if one node or link fails, or gets out of the system at an unexpected moment. No data gets lost, no connection gets broken.

– good performance ( real-time like would be great )

The trade-off between performance and resilience is pretty difficult to manage. Trying to build one on top of the other, which one would you start with first ?

Although many systems try to solve or alleviate one of these problems, none of them, as far as I am aware, can deal with all of them while maintaining decent performance. After having a look at a few research proposals, I thought that one solution for one problem would be really interesting to implement; however, after trying it, I realized how important it was for some foundation to be laid down first. I made some small developments in Erlang, and quickly wondered how I could structure my software, given all the components that I would need to satisfy all the features I thought of… I wrote some of them, while others would have required much more expertise than my own to work. So I need to heavily reuse what has already been done to make my task a bit easier if I ever want to achieve my goal.

After all there is the “Researcher way”, who is an expert in his field, and can have enough funding to spend a lot of time developing one system, until it becomes as good as it can, in theory. And there is the “Entrepreneur way”, who has to make something working quickly, no matter how dirty and partially done it can be, with everything he can find, provided that people are interested and will sustain him to improve the system along the way…

Even if I am still tempted by the first way, I am no longer a student, nor seem to be able to secure any funding at the moment, and I therefore have to take the second path.

So I should :

– reuse what is already working elsewhere: DHT – p2p data sharing

– make something interesting out of the system ??? we ll see, depending on what it can do… probably trying to use it with my little open-source game engine

– plan for re-usability : structure the project, document the different parts separately

– plan for improvements later on : divide to conquer, and specify interfaces between blocks

That’s why I decided on a basic layered architecture for a start :

– Implicit connection to the p2p network.

– DHT, mostly to keep “IP – nodeID” pairs in a distributed way, plus other “global state data”…

– Routing Algorithm

– SCRIBE-like – manage groups and multicast

– Message Transport protocol (overlay UDT? or direct SCTP / DCCP? depending on needs and performance…)

– Causality algorithms (Interval Tree Clocks-like), which might need multicast for optimization when there is no central system, probably depending on the type of implementation…

– Game Engine Layer, able to send state updates efficiently, with proper ordering, to a set of selected peers.
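
The layering above can be sketched in a few lines. This is only an illustration of the "each block talks to the block below it" idea: the `Layer` class, `build_stack` and the short layer names are hypothetical, and no real networking happens here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Layer:
    """One block of the stack; it only talks to the layer directly below it."""
    name: str
    lower: Optional["Layer"] = None
    log: List[str] = field(default_factory=list)

    def send(self, payload: str) -> str:
        # Tag the payload so the path through the stack stays visible,
        # then hand it to the next layer down (if there is one).
        tagged = f"{self.name}({payload})"
        self.log.append(tagged)
        return self.lower.send(tagged) if self.lower else tagged


def build_stack(names: List[str]) -> Layer:
    """Build the stack from bottom to top and return the top layer."""
    layer = None
    for name in names:
        layer = Layer(name, lower=layer)
    return layer


# Hypothetical short names, bottom layer first, following the list above.
LAYERS = ["p2p", "dht", "routing", "groups", "transport", "causality", "game"]
top = build_stack(LAYERS)
wire = top.send("state-update")
```

Because each layer only knows its `lower` neighbour, any block could later be swapped for a better implementation without touching the others, which is the point of specifying interfaces between blocks.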

Choosing to base the design on a DHT is, I think, the best option for me. Despite my interest in ad hoc networking protocols, and however much I would like to implement them on top of IP to get a more fault-tolerant network, I am not a network algorithms expert, and it would take me far too long to get something decent working. Also, DHTs have now been quite extensively studied, and some implementations exist and are very usable, which lets me reuse them and focus on something else. Some improvements are likely to emerge in the years to come, and by using something already known, it will be easier to integrate those evolutions.

Depending on which implementation I choose, I will have to check which features are available out of the box, and which ones I will need to implement on top of it to reach the feature set I want. Kademlia seems to be the most mature DHT algorithm from what I could gather around the internet, but I will need to look at it more deeply. SCRIBE was implemented on top of the Pastry algorithm and I would need to reimplement it on top of Kademlia, as I didn’t find any similar attempt…
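
To give an idea of what Kademlia builds on, here is a minimal sketch of its XOR distance metric, which decides which nodes are "closest" to a key. The helper names (`node_id`, `closest_nodes`, `bucket_index`) are my own, not taken from any particular implementation, and the lookup, the RPCs and bucket maintenance are all left out.

```python
import hashlib
from typing import List


def node_id(label: str) -> int:
    """Derive a 160-bit ID from a label using SHA-1 (an assumption for this sketch)."""
    return int.from_bytes(hashlib.sha1(label.encode("utf-8")).digest(), "big")


def xor_distance(a: int, b: int) -> int:
    """Kademlia's notion of distance: the bitwise XOR of two IDs, read as an integer."""
    return a ^ b


def closest_nodes(target: int, known: List[int], k: int = 3) -> List[int]:
    """Return the k known IDs closest to the target under the XOR metric."""
    return sorted(known, key=lambda n: xor_distance(n, target))[:k]


def bucket_index(own: int, other: int) -> int:
    """Position of the highest differing bit; -1 when both IDs are equal."""
    return xor_distance(own, other).bit_length() - 1
```

For example, `closest_nodes(5, [1, 4, 7, 12], k=2)` picks `4` and `7`, since their XOR distances to `5` are `1` and `2`.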

Not an easy task, but definitely an interesting research process 😉 Let’s hope there will be something worth it at the end of the road.

Peer-to-peer distributed, existing systems

While looking at GNU Social, which sadly is likely to be centralized, I found a list of other, much more distributed projects that caught my interest, and I should have a deeper look at them soon… Most of them are about file sharing, but not all…

The Circle is a peer-to-peer distributed file system written mainly in Python. It is based on the Chord distributed hash table (DHT).

> But too bad : development on the Circle ceased in 2004. However the source is still available 😉

CSpace provides a platform for secure, decentralized, user-to-user communication over the internet. The driving idea behind the CSpace platform is to provide a connect(user,service) primitive, similar to the sockets API connect(ip,port). Applications built on top of CSpace can simply invoke connect(user,service) to establish a connection.

> That is pretty similar to what I want to achieve with my current developments, but while the “user view” will be similar, the internals will be quite different…
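
To make the idea of a connect(user,service) primitive more concrete, here is a toy sketch. It is not CSpace’s actual API: the `Directory` class and this `connect` function are hypothetical, and an in-memory dictionary stands in for whatever distributed lookup would really map a user and service to an address.

```python
from typing import Dict, Tuple

Address = Tuple[str, int]  # (ip, port), as in the sockets API


class Directory:
    """In-memory stand-in for the lookup that maps (user, service) to an address."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], Address] = {}

    def register(self, user: str, service: str, address: Address) -> None:
        self._entries[(user, service)] = address

    def resolve(self, user: str, service: str) -> Address:
        try:
            return self._entries[(user, service)]
        except KeyError:
            raise LookupError(f"{user!r} does not offer {service!r}") from None


def connect(directory: Directory, user: str, service: str) -> Address:
    """Resolve the peer and return the address a real socket would connect to."""
    return directory.resolve(user, service)
```

The caller only ever names a user and a service; where that user currently lives on the network is the directory’s problem.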

Tahoe-LAFS is a secure, decentralized, data store. All of the source code is available under a choice of two Free Software, Open Source licences. This filesystem is encrypted and spread over multiple peers in such a way that it remains available even when some of the peers are unavailable, malfunctioning, or malicious.

> Yeah, so that’s done. At least there is something I will not try to do 🙂 I still need to test it though…

GNUnet is a framework for secure peer-to-peer networking that does not use any centralized or otherwise trusted services. A first service implemented on top of the networking layer allows anonymous censorship-resistant file-sharing. Anonymity is provided by making messages originating from a peer indistinguishable from messages that the peer is routing. All peers act as routers and use link-encrypted connections with stable bandwidth utilization to communicate with each other. GNUnet uses a simple, excess-based economic model to allocate resources. Peers in GNUnet monitor each other’s behavior with respect to resource usage; peers that contribute to the network are rewarded with better service.

> Too bad they focus only on file sharing…

The ANGEL APPLICATION (a subproject of MISSION ETERNITY) aims to minimize, and ideally eliminate, the administrative and material costs of backing up. It does so by providing a peer-to-peer/social storage infrastructure where people collaborate to back up each other’s data. Its goals are (in order of descending relevance to this project)

> File sharing…

You can call Netsukuku a “scalable ad-hoc network architecture for cheap self-configuring Internets”. Scalable ad-hoc network architectures give the possibility to build and sustain a network as large as the Internet without any manual intervention. Netsukuku adopts a modified distance vector routing mechanism that is well integrated in different layers of its hierarchical network topology.

> Ad-Hoc alternative network 🙂 interesting… I still want to use internet though…

Syndie is an open source system for operating distributed forums, offering a secure and consistent interface to various anonymous and non-anonymous content networks.

> Only forums… mmm…

I also found a blog that seems interesting, although I am pretty sure it is mostly Amazon-centric : AllThingsDistributed.com

It might be worth a deeper look to see what the big companies are coming up with…